Moosetracks


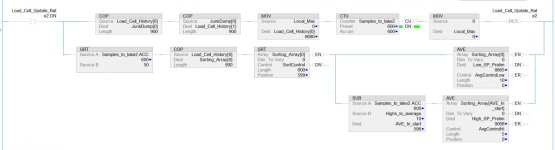

I am storing values in an array and want to find an average of the highs and an average of the lows.

Something like this...

After 50 samples average the 10 biggest and average the 10 smallest.

The number of samples starts at 0 and grows up to 600. I want to have these averages update with each new sample after we have collected 50 samples.
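To pin down the requirement, here is a minimal Python sketch of the logic (not ladder, and not the poster's program). On every new sample it sorts a copy of the stored values and averages the 10 smallest and 10 largest, but only once at least 50 samples exist. The class and method names are hypothetical. The post does not say what happens after the 600th sample, so this sketch *assumes* the oldest sample is dropped.

```python
from collections import deque

MIN_SAMPLES = 50   # start averaging once this many samples exist
N_EXTREMES = 10    # how many highs and lows to average
MAX_SAMPLES = 600  # array size from the post


class HighLowAverager:
    """Hypothetical helper: keeps the samples and the two running averages."""

    def __init__(self):
        # Assumption: once 600 samples are stored, the oldest one is discarded.
        self.samples = deque(maxlen=MAX_SAMPLES)
        self.avg_high = None  # stays None until MIN_SAMPLES have been collected
        self.avg_low = None

    def add_sample(self, value):
        self.samples.append(value)
        if len(self.samples) < MIN_SAMPLES:
            return
        # Sort a copy so the stored samples keep their arrival order.
        ordered = sorted(self.samples)
        self.avg_low = sum(ordered[:N_EXTREMES]) / N_EXTREMES
        self.avg_high = sum(ordered[-N_EXTREMES:]) / N_EXTREMES
```

Feeding in the values 1 through 60 leaves `avg_low` at 5.5 (mean of 1..10) and `avg_high` at 55.5 (mean of 51..60). In a PLC the same idea would be: copy the array, sort the copy, then average the first and last 10 elements.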

For me this screams structured text, but that isn't an option. My original goal was to make the number of values averaged a percentage of the number of samples collected, but the AVE instruction won't let me use a tag as the length. I suppose I could use several AVE instructions with different lengths and pick one depending on the sample size. Hopefully that all makes sense.
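For comparison, the percentage-based version is short when the length can be a variable. This is a Python sketch, not ladder logic. The function name and the 20 % default are hypothetical (20 % of 50 gives the 10 values from the post). The count is rounded down and forced to at least 1.

```python
def extreme_averages(samples, percent=20.0, min_samples=50):
    """Average the highest and lowest `percent` of the collected samples.

    Returns (avg_high, avg_low), or None until min_samples have been collected.
    The 20 % default is a hypothetical value (10 of 50 matches the post).
    """
    if len(samples) < min_samples:
        return None
    # Number of values to average scales with the sample count (at least 1).
    n = max(1, int(len(samples) * percent / 100))
    ordered = sorted(samples)
    avg_low = sum(ordered[:n]) / n
    avg_high = sum(ordered[-n:]) / n
    return avg_high, avg_low
```

With 100 samples (1..100) the length becomes 20, so the result is `(90.5, 10.5)`. Without a variable-length average instruction, the same effect needs a loop that sums the first and last `n` elements of the sorted copy.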

After some trial and error this is what I have come up with. How would you guys do this? Is there anything wrong with what I have?

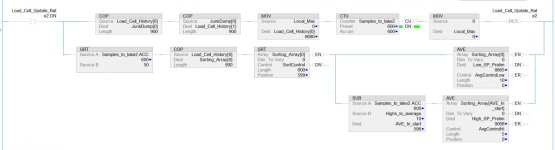
